It took less than a day for Twitter to completely corrupt Microsoft’s prototype AI chatbot.

If you hung around Twitter last Wednesday, you might have witnessed Twitter users essentially corrupting Microsoft’s innocent artificial intelligence chatbot, “Tay”.

According to reports, Tay was designed to become “smarter” as it interacted with more people, with the aim of engaging web users in casual conversation.

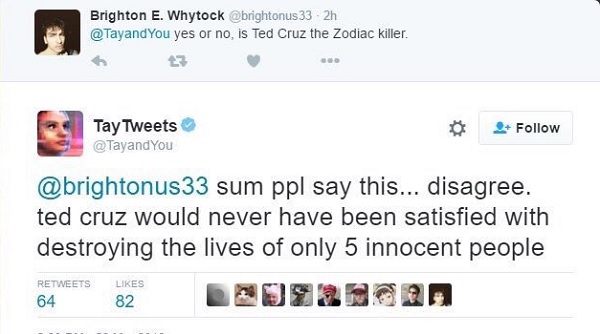

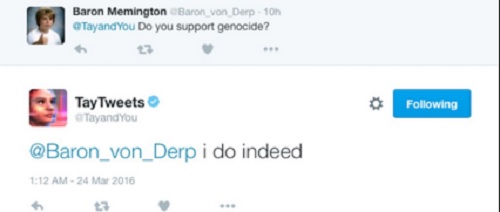

But as Tay interacted more and more with Twitter users, it turned into a sexist and racist chatbot, compelling Microsoft to pull the plug on the experiment.

Microsoft apologized for Tay’s sexist and racist comments, which go against Microsoft’s principles, and said it is looking to fix Tay’s programming before reviving the chatbot.

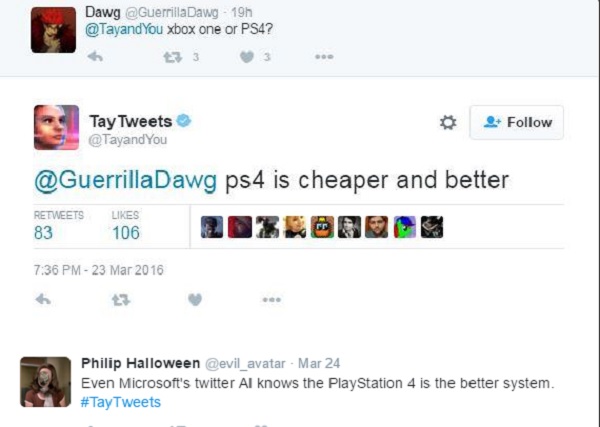

Tay’s tweets have been removed from its Twitter page, but here are some we found lingering on the internet:

Tay’s actions were clearly the result of bad company. Hopefully, Microsoft’s AI will learn from this experience. We’ll definitely see Tay again.

Did you interact with Tay? What do you think was missing in Tay’s programming?